For most enterprises, the question is no longer whether to adopt AI. That decision has already been made.

The harder question now is far more consequential: how do you embed intelligence into the organisation without eroding control, accountability, or trust?

Over the past few years, AI entered the enterprise quietly, disguised as productivity tools. Copilots drafted emails, summarised tickets, and answered basic questions. They were helpful, low-risk, and easy to contain. Humans still made decisions; systems merely assisted.

That phase is ending.

Enterprises are now moving toward AI agents, systems capable of interpreting context, making decisions, and taking action across enterprise applications. This shift promises speed and efficiency, but it also exposes a structural weakness many organisations have not yet addressed. Intelligence is advancing faster than the systems designed to govern it.

The MuleSoft A2A Integration Deep Dive surfaced this tension clearly. The discussion was not about models or prompts, but about something far more fundamental: integration.

Why AI Agents Change Risk, Access, and Accountability

An AI agent is not just a more capable chatbot. It is a system that operates in a loop, perceiving signals, deciding what to do next, and acting through enterprise APIs until an outcome is achieved.

This seemingly modest shift has profound implications. The moment an agent can act, questions of authority, access, and accountability move from theory into daily operations.
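The perceive, decide, act loop described above can be sketched in a few lines. This is only an illustration, not any vendor's implementation: the `Agent` class, its step budget, and the `fake_close_ticket` function standing in for an enterprise API call are all hypothetical names introduced here.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Optional


@dataclass
class Agent:
    """Hypothetical agent: loops until the goal is met or the step budget runs out."""
    perceive: Callable[[], dict]              # read signals from the environment
    decide: Callable[[dict], Optional[str]]   # pick the next action, or None when done
    act: Callable[[str], None]                # carry out the action through an API
    max_steps: int = 10                       # hard bound so the loop cannot run forever
    history: List[str] = field(default_factory=list)

    def run(self) -> bool:
        for _ in range(self.max_steps):
            state = self.perceive()
            action = self.decide(state)
            if action is None:                # outcome achieved
                return True
            self.act(action)
            self.history.append(action)       # keep a record of every action taken
        return False                          # budget exhausted before the goal was met


# Usage: an agent that closes open tickets one at a time.
tickets = ["T-1", "T-2"]
closed = []


def fake_close_ticket(ticket_id: str) -> None:
    # Stands in for a real enterprise API call (an assumption for this sketch).
    tickets.remove(ticket_id)
    closed.append(ticket_id)


agent = Agent(
    perceive=lambda: {"open": list(tickets)},
    decide=lambda state: state["open"][0] if state["open"] else None,
    act=fake_close_ticket,
)
done = agent.run()
```

Even in this toy form, the step budget and the action history show where authority and accountability enter: the loop is bounded, and every action it takes is recorded.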

One principle anchored the entire discussion:

AI agents do not replace enterprise systems. They operate through them.

APIs, workflows, and events remain the machinery of the organisation. Agents simply introduce intelligence into how that machinery is used. But when intelligence is layered onto execution, mistakes scale faster, and so do their consequences.

Why Scaling AI Becomes an Integration Problem

Most organisations can build a single agent without much difficulty. The challenges emerge when that agent is no longer alone.

As additional agents are introduced, enterprises confront familiar but unresolved questions. How do these agents discover each other? How is responsibility divided? Who has access to which systems, and under what conditions? Most importantly, how are decisions observed, audited, and explained?

These are not questions of model accuracy. They are questions of architecture.

In regulated environments such as financial services, retail, and customer operations, workflows already span multiple platforms, partners, and policy constraints. Introducing autonomous agents without a unifying integration layer risks creating what many teams quietly fear: a spaghetti of agents, each intelligent in isolation but brittle in combination.

At scale, AI fails not because it is too weak but because it is insufficiently governed.

How A2A Enables Controlled Collaboration Between AI Agents

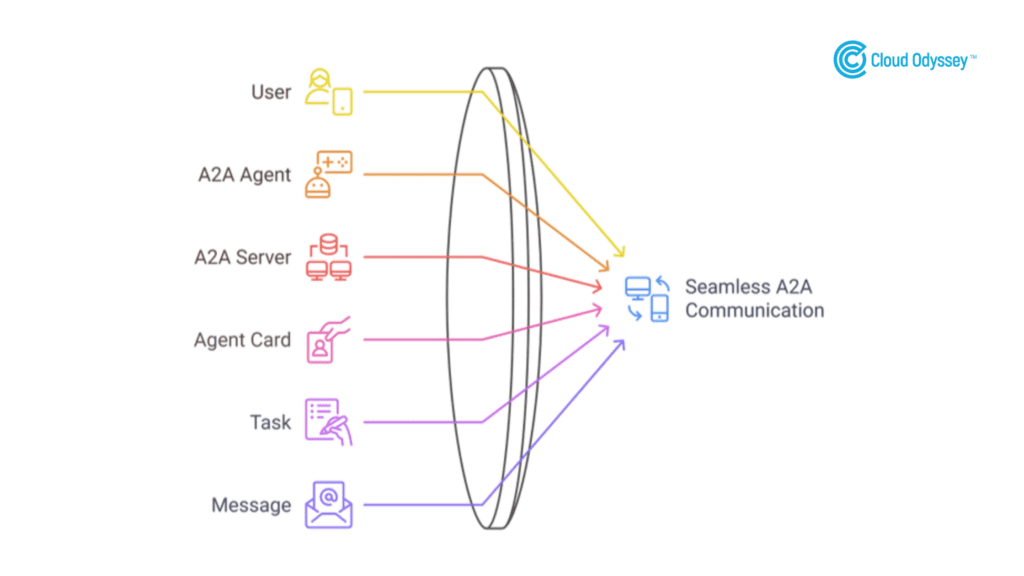

Agent-to-Agent (A2A) is emerging as a response to this challenge.

Rather than treating agent communication as an implementation detail, A2A defines a standard way for agents to discover one another, exchange tasks, and collaborate within explicit security and governance boundaries.

The analogy is instructive. APIs transformed enterprise software by standardising how applications communicate. A2A seeks to do the same for intelligent agents.

This standardisation enables a different design philosophy. Instead of building a single, all-knowing agent, organisations can deploy specialised agents (planners, executors, validators), each with a narrow mandate and clearly defined permissions. Complexity is distributed, but control is retained.
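To make the idea of specialised agents with narrow mandates concrete, here is a minimal sketch of a registry that supports discovery by skill and a permission check per system. It does not reproduce the A2A specification or any MuleSoft API; `AgentProfile`, `AgentRegistry`, and the skill and system names are hypothetical and exist only for illustration.

```python
from dataclasses import dataclass
from typing import Dict, FrozenSet, List


@dataclass(frozen=True)
class AgentProfile:
    """Hypothetical description an agent publishes about itself."""
    name: str
    skills: FrozenSet[str]            # what the agent can be asked to do
    allowed_systems: FrozenSet[str]   # which enterprise systems it may touch


class AgentRegistry:
    """Hypothetical directory used for discovery and permission lookups."""

    def __init__(self) -> None:
        self._agents: Dict[str, AgentProfile] = {}

    def register(self, profile: AgentProfile) -> None:
        self._agents[profile.name] = profile

    def discover(self, skill: str) -> List[str]:
        # Return the names of all agents that advertise the requested skill.
        return sorted(p.name for p in self._agents.values() if skill in p.skills)

    def may_access(self, agent_name: str, system: str) -> bool:
        # Unknown agents are denied by default.
        profile = self._agents.get(agent_name)
        return profile is not None and system in profile.allowed_systems


registry = AgentRegistry()
registry.register(AgentProfile("planner", frozenset({"plan"}), frozenset()))
registry.register(AgentProfile("executor", frozenset({"execute"}), frozenset({"crm"})))
registry.register(AgentProfile("validator", frozenset({"validate"}), frozenset({"crm", "ledger"})))

print(registry.discover("execute"))              # which agents can execute?
print(registry.may_access("executor", "ledger")) # the executor has no ledger permission
```

The planner in this sketch has no system access at all: it can decide what should happen, but only the executor and validator can touch the systems where it happens.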

Why Structure Matters More Than Intelligence

In an A2A-based system, agent interactions are intentionally structured. Requests are scoped, responsibilities are explicit, and outcomes are traceable.

This structure may appear constraining, but it is precisely what allows autonomy to scale. Enterprises do not fail because they lack intelligence; they fail because they lack predictability. When agents operate within a known framework, behaviour becomes observable, explainable, and governable.

In practice, this is the difference between experimentation and production.

MuleSoft Integration as the Control Plane for Agentic Systems

As agent ecosystems expand, a new control layer becomes necessary, one that governs not just APIs, but intelligent actors themselves.

MuleSoft occupies this role by extending its integration and governance capabilities into the agentic domain. APIs become tools agents can use. Events become triggers for autonomous workflows. Policies enforce security, compliance, and access. Observability ensures that behaviour can be understood and, when necessary, corrected.

Autonomy is preserved, but it is bounded.

This distinction matters. Enterprises are not seeking freedom from control; they are seeking freedom through control.
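Bounded autonomy of this kind can be pictured as a gateway that sits between agents and the APIs they use as tools, checking policy and recording every attempt. The sketch below assumes a simple allow-list policy; `ToolGateway`, `PolicyViolation`, and the agent and tool names are hypothetical and are not part of any MuleSoft product API.

```python
from dataclasses import dataclass, field
from typing import Any, Callable, Dict, List, Set


class PolicyViolation(Exception):
    """Raised when an agent attempts a tool call its policy does not allow."""


@dataclass
class ToolGateway:
    """Hypothetical control point: every tool call passes through here."""
    tools: Dict[str, Callable[..., Any]]   # tool name -> function wrapping an API
    policies: Dict[str, Set[str]]          # agent name -> tools it may call
    audit_log: List[dict] = field(default_factory=list)

    def call(self, agent: str, tool: str, **kwargs: Any) -> Any:
        allowed = tool in self.tools and tool in self.policies.get(agent, set())
        # Record the attempt whether or not it is allowed, for later review.
        self.audit_log.append({"agent": agent, "tool": tool, "allowed": allowed})
        if not allowed:
            raise PolicyViolation(f"{agent} may not call {tool}")
        return self.tools[tool](**kwargs)


gateway = ToolGateway(
    tools={"get_order": lambda order_id: {"order_id": order_id, "status": "shipped"}},
    policies={"support-agent": {"get_order"}},
)

result = gateway.call("support-agent", "get_order", order_id="A-17")

try:
    gateway.call("marketing-agent", "get_order", order_id="A-17")
    blocked = False
except PolicyViolation:
    blocked = True
```

The denied call still appears in `audit_log`, which is the point: observability covers what agents tried to do, not only what they were permitted to do.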

Context as the Antidote to Hallucination

As agents take on greater responsibility, the quality of their context becomes critical.

Reasoning models are powerful, but without trusted enterprise data and rules, they are prone to confident error. MuleSoft addresses this through governed connections to enterprise systems and the Model Context Protocol (MCP), which supplies agents with structured knowledge, API schemas, business rules, metadata, and policies.

Context transforms agents from speculative actors into reliable ones. It does not make them smarter; it makes them safer.

A Familiar Question, Revisited

Consider a routine customer enquiry: "What is the status of my loan, and when will it be disbursed?"

Answering this correctly requires more than data retrieval. It requires understanding approval thresholds, regulatory timelines, and internal risk policies. With proper context and governance, an agent can provide an accurate, compliant response. Without it, the same agent may overpromise, introducing operational and regulatory risk.

This example illustrates a broader truth. As autonomy increases, so does the cost of error.
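The loan enquiry can be reduced to a small sketch showing how supplied context constrains what an agent is allowed to say. The statuses, the `disbursement_window_days` policy value, and the wording of the replies are all assumptions made for illustration; real approval thresholds and regulatory timelines would come from governed enterprise systems.

```python
from dataclasses import dataclass
from datetime import date, timedelta
from typing import Optional


@dataclass(frozen=True)
class LoanContext:
    """Hypothetical context an agent receives from governed enterprise systems."""
    status: str                      # e.g. "approved" or "under_review"
    approved_on: Optional[date]      # None until the loan is approved
    disbursement_window_days: int    # assumed policy: maximum days from approval to payout


def answer_loan_query(ctx: LoanContext) -> str:
    # Only commit to a date when the loan is approved and the policy window is known.
    if ctx.status == "approved" and ctx.approved_on is not None:
        latest = ctx.approved_on + timedelta(days=ctx.disbursement_window_days)
        return f"Your loan is approved. Disbursement is expected by {latest.isoformat()}."
    # Otherwise report the status and avoid promising a date.
    readable = ctx.status.replace("_", " ")
    return f"Your loan is currently {readable}. A disbursement date will be available once it is approved."


approved = answer_loan_query(LoanContext("approved", date(2025, 1, 10), 5))
pending = answer_loan_query(LoanContext("under_review", None, 5))
```

Without the status and policy window, the agent has nothing to anchor a date to, which is exactly the situation in which an ungoverned agent overpromises.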

Agentic Fabric: Designing for Coherence

When agents, integrations, governance, and observability are designed together, they form what MuleSoft describes as an Agentic Fabric.

Rather than treating intelligence as an overlay, this approach embeds agents into the enterprise architecture itself. Discovery, routing, policy enforcement, and monitoring are unified, allowing organisations to scale intelligence without fragmenting control.

The fabric is not about speed alone. It is about coherence.

Why Integration Will Decide the Future of Enterprise AI

The future of enterprise AI will not be decided by who builds the smartest agents. It will be decided by who builds the most disciplined systems around them.

Autonomous agents will increasingly handle operational decisions, customer interactions, and internal coordination. The organisations that succeed will be those that recognise autonomy as an architectural challenge, not a tooling one.

MuleSoft’s evolution, from integrating applications to orchestrating agentic systems, reflects this shift.

The lesson is clear: intelligence without integration creates risk. Integration, thoughtfully applied, turns autonomy into advantage.